AI in Production: Opportunities & Risks — An Interview with Iris Phan

Artificial intelligence has become a central pillar of modern industrial transformation. Across manufacturing environments, AI in production is reshaping how companies automate processes, ensure quality, optimize resources, and prevent downtime. Yet alongside these opportunities, important questions arise: How should AI be used responsibly? How do we balance efficiency with ethics? What are the risks of uncontrolled AI development?

To explore these questions, the following discussion is based on an interview with Iris Phan, a fully qualified lawyer specializing in IT law, data protection, ethics, and automated systems. She has worked for more than six years at the computer center of Leibniz University Hannover and teaches philosophy of science at the university’s Institute of Philosophy. Her perspective offers insight into the challenges and responsibilities that accompany AI in production.

This article merges her statements with broader context and expert commentary to provide a comprehensive overview of opportunities and risks associated with AI in industrial environments.

Introduction to the Expert Perspective

Iris Phan’s professional background spans law, data protection, IT governance, and philosophy of science. Her interdisciplinary expertise is particularly relevant for AI in production because industrial AI systems interact with complex ethical, legal, and operational frameworks. As manufacturing companies adopt automated processes, predictive analytics, and machine-learning tools, the demand for clear legal and ethical guidance continues to grow.

Her interview highlights the importance of transparency, data protection, and responsible development as key principles guiding the use of AI in production.

AI in Production: Is Industry Ready for It?

One of the first questions posed in the interview concerns the suitability of AI for industrial environments. According to Phan, much of the public debate frames AI in terms of “intelligence” in the human sense, often leading to unrealistic expectations or fears of replacement. In reality, AI in production focuses on supporting workers, improving safety, and automating tasks that are repetitive, dangerous, or physically demanding.

Automation is not a new phenomenon. Over many decades, machines have replaced manual labor in high-risk or low-precision tasks. What distinguishes AI-based automation today is the growing ability to analyze complex data patterns and respond dynamically to operational changes. AI in production enhances human capabilities rather than replacing them, enabling workers to shift from manual oversight to more meaningful supervisory and strategic roles.

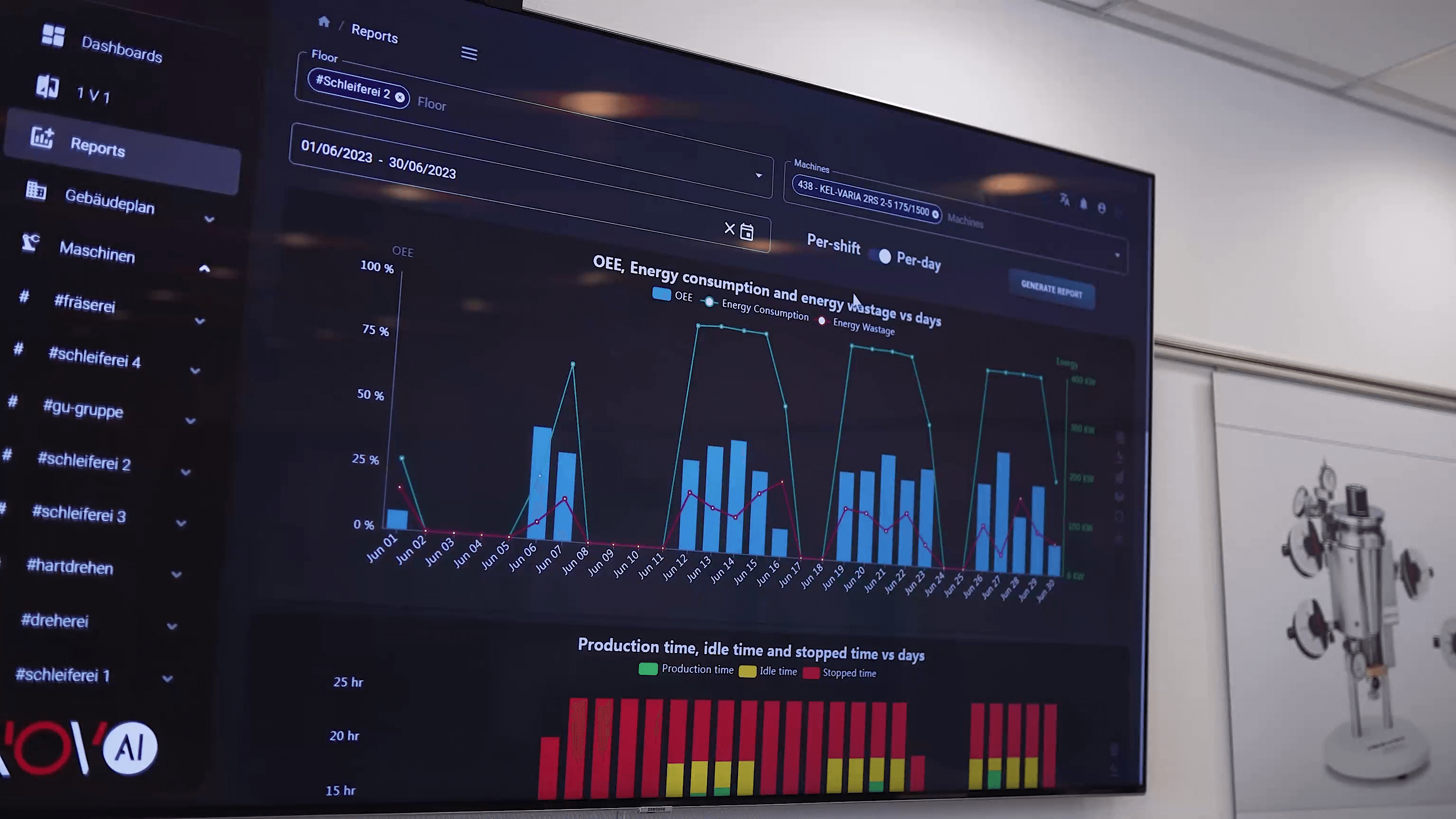

Manufacturing companies increasingly rely on intelligent systems to monitor machine states, optimize workflows, reduce material waste, and improve predictability in operations. This makes AI not only suitable but essential for modern industry.

Environmental Impact and Sustainability Through AI in Production

The interview emphasizes that the environmental effect of AI depends on how and where it is applied. When used thoughtfully, AI in production offers clear ecological benefits. Predictive maintenance is a strong example: by forecasting failures before they occur, companies avoid producing excess waste or discarding materials prematurely. AI-supported procurement reduces unnecessary manufacturing of spare parts and aligns production more closely with actual demand.

Through predictive analytics, manufacturers can also improve energy efficiency, optimize machine loads, and reduce emissions. Many sustainability-focused initiatives from organizations like the OECD AI Policy Observatory highlight how data-driven tools support greener industrial operations.

AI in production, when applied responsibly, contributes to lower resource consumption and more sustainable manufacturing cycles.

Can the Development of AI Be Controlled?

A common misconception about AI is the belief that its development is entirely uncontrollable due to the “black box” nature of machine-learning processes. Phan challenges this idea by explaining that political frameworks, societal expectations, research funding, and regulatory agendas all influence the direction of AI development. While absolute control is impossible, governance mechanisms can guide the use and design of AI systems.

For example, the EU’s ongoing regulatory efforts, ethical guidelines, and data protection standards demonstrate how democratic institutions shape the evolution of AI in production. These frameworks aim to ensure responsible innovation while preventing misuse.

The European Data Protection Board (EDPB) provides ongoing guidance on data processing, transparency, and accountability.

Such regulations ensure that AI deployment in industry respects legal boundaries and protects the rights of workers and citizens.

Risks of AI in Production: The Real Danger Is Not the Technology

When asked about risks, Phan states clearly that the danger does not lie in AI itself but in unreflective development. If organizations integrate AI tools without addressing ethical concerns, data protection requirements, or potential biases, the consequences can be harmful.

Typical risks associated with AI in production include:

- Lack of transparency in algorithmic decisions
- Misinterpretation of machine-generated insights
- Overreliance on automated systems
- Insufficient oversight during critical processes
- Data misuse or unauthorized data collection
- Systemic biases embedded in training data

These risks underscore the need for governance structures that monitor the implementation of AI systems and ensure their responsible use. Phan emphasizes that errors are normal during technological development, but problems arise when organizations ignore them or fail to evaluate long-term consequences.

Decision-Making: What Should AI Be Allowed to Do?

One of the central questions in AI ethics concerns the boundaries between machine and human decision-making. According to Phan, AI in production is most effective when used for technical, repetitive, or measurable tasks. Examples include:

- Monitoring machine fatigue levels
- Detecting anomalies
- Predicting component failures
- Stabilizing production quality
- Optimizing energy usage

These applications relieve human workers from fatigue-inducing tasks and allow technical teams to focus on higher-level decision-making.
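To make one of these measurable tasks concrete, anomaly detection on a sensor stream can be sketched in a few lines of Python. This is only an illustration and not a method described in the interview: the function name `find_anomalies`, the window size, the threshold, and the vibration readings are all hypothetical, and a real plant would tune or replace this simple rolling z-score approach.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate strongly from the preceding window.

    Hypothetical helper: a reading is flagged when it lies more than
    `threshold` standard deviations from the mean of the previous
    `window` readings (a rolling z-score).
    """
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: a z-score cannot be computed, so skip.
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Made-up vibration amplitudes with one obvious spike at index 6.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.51, 0.95, 0.50, 0.49]
flagged = find_anomalies(vibration)
print(flagged)  # [6]
```

A check like this only flags a reading for attention. Deciding what the spike means and whether to stop the machine can still rest with the technical team.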

In contrast, decisions that significantly affect individuals, particularly in legal or moral contexts, should remain under human supervision. Machines excel at rational analysis but cannot understand human complexity or context. Therefore, AI in production should support, not replace, human judgment in sensitive areas.
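One way to picture this boundary in software is a simple routing rule that sends sensitive or uncertain recommendations to a person. The sketch below is an assumption for illustration only: the `Recommendation` fields, the `route` function, and the 0.9 confidence cutoff are hypothetical and are not taken from the interview or from any specific product.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    """A hypothetical AI-generated recommendation awaiting a decision."""
    action: str
    affects_people: bool  # assumed flag: does the action significantly affect individuals?
    confidence: float     # assumed model confidence between 0.0 and 1.0


def route(rec, min_confidence=0.9):
    """Return "human_review" for sensitive or uncertain cases, otherwise "auto"."""
    if rec.affects_people or rec.confidence < min_confidence:
        return "human_review"
    return "auto"


print(route(Recommendation("reduce spindle speed", False, 0.97)))  # auto
print(route(Recommendation("change shift assignment", True, 0.99)))  # human_review
```

The rule deliberately ignores confidence whenever people are affected, so a highly confident model still cannot bypass human review in those cases.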