AI-Powered Machine Data Acquisition: Save More Than DIY Ever Could

What if the sensor you install today could pay for itself within months? Modern manufacturing leaders are discovering that high-quality machine data—captured, cleaned and interpreted by AI—delivers faster gains than ad-hoc DIY wiring and spreadsheets. The difference between noisy, incomplete logs and curated machine data is productivity: clearer alerts, fewer false positives, and faster root-cause fixes. For German Mittelstand teams, the question is not whether to collect signals but how to collect them reliably so analytics can act.

Why DIY Data Fails

Many small and medium-sized factories try to scrape together machine data through PLC taps, manual recording and Excel. That approach often produces partial datasets that miss critical events like micro-stops or energy spikes. Without synchronized timestamps, correlating a spindle stall to a downstream reject becomes guesswork. These gaps create blind spots for both operators and analysts and make it hard to measure true OEE.
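Correlation of this kind only works once every event carries a timestamp from a shared clock. Here is a minimal sketch. It assumes both streams are already synchronized, for example via NTP or PTP on the edge devices, and uses a hypothetical 120-second maximum lag. Each reject is linked to the most recent spindle stall that preceded it:

```python
from bisect import bisect_right

def match_rejects_to_stalls(stall_times, reject_times, max_lag_s=120.0):
    """For each reject, find the latest stall at most max_lag_s seconds earlier (or None).
    All timestamps are seconds on one synchronized clock; max_lag_s is a hypothetical tuning value."""
    stalls = sorted(stall_times)
    matches = []
    for reject in sorted(reject_times):
        i = bisect_right(stalls, reject)  # number of stalls at or before this reject
        if i > 0 and reject - stalls[i - 1] <= max_lag_s:
            matches.append((reject, stalls[i - 1]))
        else:
            matches.append((reject, None))
    return matches

stalls = [100.0, 500.0]          # spindle stall times (hypothetical)
rejects = [130.0, 300.0, 560.0]  # downstream reject times (hypothetical)
print(match_rejects_to_stalls(stalls, rejects))
# [(130.0, 100.0), (300.0, None), (560.0, 500.0)]
```

Some rejects have no stall inside the window and come back as `None`. That result is useful too, because it points to a cause other than the spindle.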

Machine data quality matters because analytics and AI depend on consistent inputs. Missing cycles, inconsistent sampling rates and protocol mismatches cause models to underperform or force data pipelines to discard records entirely. Moreover, DIY systems frequently lack edge preprocessing, security controls and standardized schemas, which makes scaling expensive and fragile. The hidden maintenance costs (custom scripts, connector updates and Excel workarounds) often exceed the initial hardware savings within a year.

Real-Time Machine Data

Real-time machine data acquisition captures events the moment they happen, which turns reactive maintenance into proactive action. According to Deloitte’s 2025 Smart Manufacturing survey, respondents reported a typical net impact of 10–20% improvement in production output after deploying smart manufacturing tools, and faster anomaly detection was a key driver (Deloitte, 2025).

Real-time capture eliminates the lag between a machine fault and human awareness. Consider a CNC line where a coolant pump intermittently loses pressure for two seconds every few hours. A manual log will miss these micro-failures. An AVA Sensor-style retrofit with onboard preprocessing can flag the pattern and trigger a scheduled preventive maintenance step before the spindle overheats. That small intervention can prevent costly scrap and avoid an unplanned lost shift.
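Detecting such micro-failures mostly comes down to finding short runs below a threshold in a continuously sampled signal. The sketch below is a generic illustration, not the AVA Sensor firmware. The pressure threshold, sample period and minimum duration are hypothetical parameters:

```python
def find_pressure_dips(samples, threshold_bar, sample_period_s, min_duration_s=1.0):
    """Return (start_time_s, duration_s) for each run of consecutive samples below
    threshold_bar that lasts at least min_duration_s."""
    dips = []
    run_start = None
    for i, value in enumerate(samples):
        if value < threshold_bar:
            if run_start is None:
                run_start = i  # a dip begins
        elif run_start is not None:
            duration = (i - run_start) * sample_period_s
            if duration >= min_duration_s:
                dips.append((run_start * sample_period_s, duration))
            run_start = None  # the dip has ended
    # Close a dip that is still open at the end of the buffer
    if run_start is not None:
        duration = (len(samples) - run_start) * sample_period_s
        if duration >= min_duration_s:
            dips.append((run_start * sample_period_s, duration))
    return dips

pressure = [4.1, 4.0, 1.2, 1.1, 1.3, 1.2, 4.0, 1.0, 4.1]  # bar, one sample every 0.5 s (hypothetical)
print(find_pressure_dips(pressure, threshold_bar=3.0, sample_period_s=0.5))  # [(1.0, 2.0)]
```

The single-sample drop at index 7 is ignored as noise. The two-second dip is reported. Counting these dips per shift turns isolated events into the recurring pattern a technician can schedule around.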

Real-time feeds also enable closed-loop automation. For example, when a lathe reports a rising vibration signature, a supervisory controller can automatically reduce the feed rate to avoid rejects while alerting maintenance. Hourly manual checks cannot deliver that split-second coordination, and this coordination is what produces measurable reductions in scrap and rework. Over a quarter, these small reductions add up to meaningful yield improvements and lower labor costs.
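A supervisory rule of this kind can be sketched in a few lines. The `FeedGuard` class below is hypothetical. It computes the root-mean-square (RMS) vibration over a sliding window. When a full window exceeds a limit, it steps the feed override down toward a floor and records an alert. Real controllers expose feed override through vendor-specific interfaces, which are not modeled here:

```python
import math
from collections import deque

class FeedGuard:
    """Hypothetical supervisory rule: step the feed override down while windowed vibration RMS is too high."""

    def __init__(self, rms_limit, window=8, step_pct=10, min_feed_pct=60):
        self.rms_limit = rms_limit          # vibration limit, same units as the samples (e.g. mm/s)
        self.window = deque(maxlen=window)  # most recent vibration samples
        self.step_pct = step_pct            # override reduction per intervention
        self.min_feed_pct = min_feed_pct    # never reduce below this override
        self.feed_pct = 100                 # current feed override in percent
        self.alerts = []                    # messages for the maintenance team

    def update(self, sample):
        """Add one vibration sample and return the (possibly reduced) feed override."""
        self.window.append(sample)
        if len(self.window) < self.window.maxlen:
            return self.feed_pct  # wait for a full window before deciding
        rms = math.sqrt(sum(x * x for x in self.window) / len(self.window))
        if rms > self.rms_limit and self.feed_pct > self.min_feed_pct:
            self.feed_pct = max(self.min_feed_pct, self.feed_pct - self.step_pct)
            self.alerts.append(f"vibration RMS {rms:.2f} above {self.rms_limit}: feed override now {self.feed_pct}%")
            self.window.clear()  # judge the next step on data recorded at the new feed
        return self.feed_pct
```

Clearing the window after each reduction means every further step needs a fresh, full window of readings taken at the new feed rate. Without that, the feed could be stepped down repeatedly on the same burst of readings.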

Retrofitting Wins

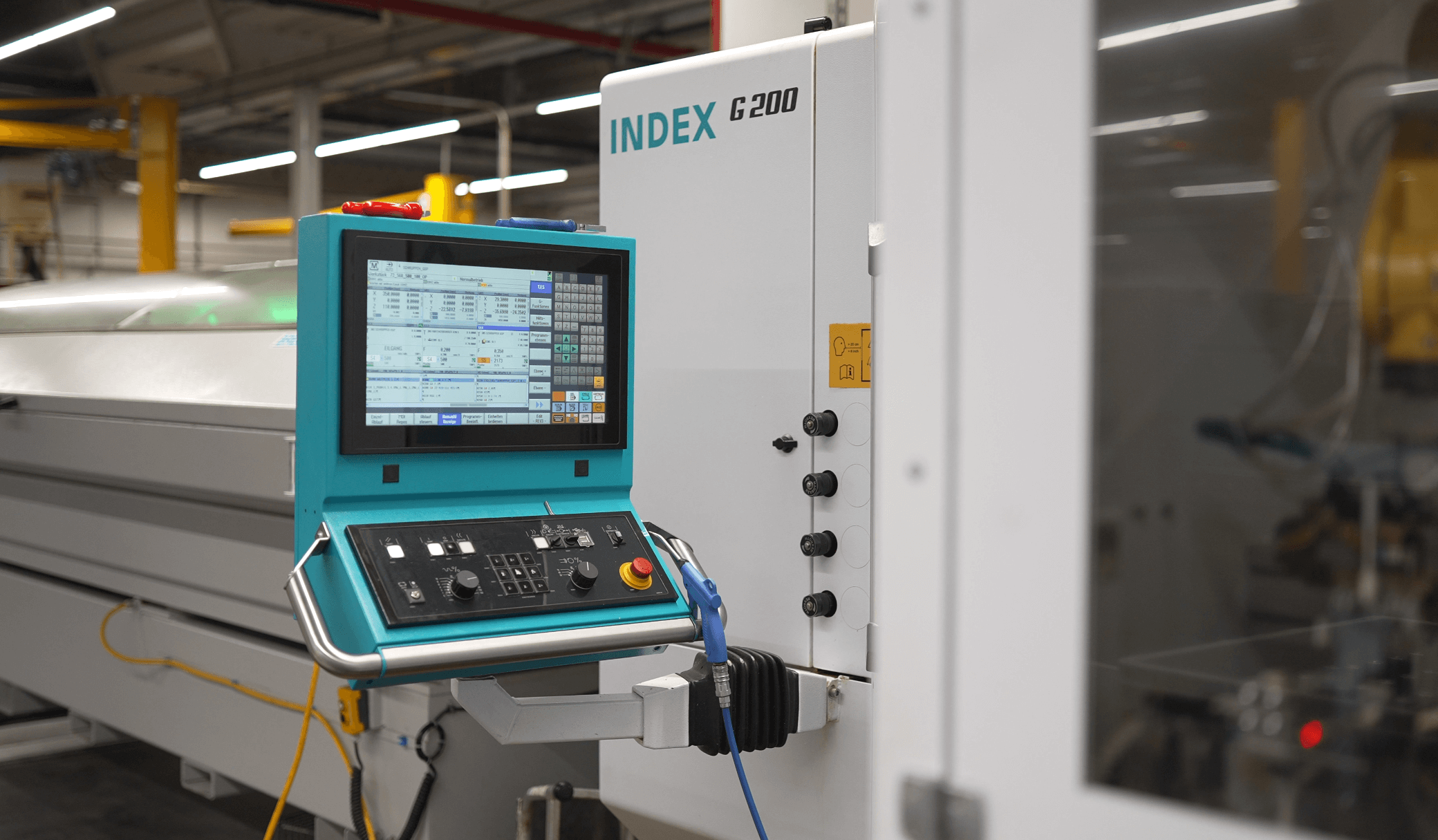

Retrofitting is the pragmatic path for Mittelstand plants that cannot replace equipment. Modern retrofits are machine-agnostic: they attach to legacy controllers, read electrical signatures and add edge compute. Case studies, including internal implementations, show that this approach can raise OEE substantially; some projects have doubled OEE from roughly 30% to 60% by eliminating hidden downtime and optimizing cycle times.
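OEE is conventionally the product of availability, performance and quality. The sketch below uses that standard definition. The shift figures are hypothetical and were chosen only to reproduce the 30% and 60% levels mentioned above:

```python
import math

def oee(planned_min, downtime_min, ideal_cycle_min, total_count, good_count):
    """Overall equipment effectiveness = availability x performance x quality."""
    run_min = planned_min - downtime_min
    availability = run_min / planned_min                       # share of planned time the machine ran
    performance = (ideal_cycle_min * total_count) / run_min    # actual speed vs. ideal speed
    quality = good_count / total_count                         # share of good parts
    return availability * performance * quality

# Hypothetical 480-minute shift with a 1-minute ideal cycle time
before = oee(planned_min=480, downtime_min=240, ideal_cycle_min=1.0, total_count=180, good_count=144)
after = oee(planned_min=480, downtime_min=96, ideal_cycle_min=1.0, total_count=360, good_count=288)
print(f"OEE before: {before:.0%}, after: {after:.0%}")  # OEE before: 30%, after: 60%

# The three factors collapse to good output at ideal speed divided by planned time
assert math.isclose(after, 288 * 1.0 / 480)
```

The final check shows why OEE rewards both less downtime and fewer rejects: all three factors reduce to good parts produced at ideal speed, divided by planned time.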

Retrofitting preserves operations and reduces implementation risk. Instead of multi-week PLC migrations, sensor modules can be installed during planned shifts and begin streaming validated machine data within hours. The faster capture is standardized, the sooner analytics can find bottlenecks such as an underperforming press that causes queueing downstream. For many small shops, that speed of deployment is the difference between a stalled project and a visible return on investment.

Practical Example

A German sheet-metal shop retrofitted 12 presses with AI-enabled sensor modules and saw a 25% reduction in unplanned stops within the first three months. The retrofit captured torque variations that the shop’s PLCs did not report, enabling technicians to replace worn bearings before catastrophic failure. Beyond reduced stops, the shop reported improved scheduling accuracy because cycle-time variance dropped by about 15%.

Pilot Design

A retrofit pilot should be simple and measurable. Start with a single line that has known pain points, define baseline KPIs (OEE, MTTR, energy per part) and select a short measurement window of 60–90 days. Prioritize lines where quick wins are visible so stakeholders stay engaged. Run a control line or batch unchanged over the same window, so that improvements can be attributed to the retrofit rather than to seasonal variation.
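The comparison with a control line can be made explicit. This sketch assumes KPIs are already aggregated per measurement window. The helpers below compute MTTR and energy per part. They estimate the attributable change with difference-in-differences, one common way to use a control line; other evaluation designs work too. All figures are hypothetical:

```python
from statistics import mean

def mttr_minutes(repair_durations_min):
    """Mean time to repair: average length of repair events, in minutes."""
    return mean(repair_durations_min)

def energy_per_part_kwh(energy_kwh, good_parts):
    """Energy consumed per good part over the measurement window."""
    return energy_kwh / good_parts

def attributable_change(pilot_before, pilot_after, control_before, control_after):
    """Difference-in-differences: the pilot line's KPI change minus the control line's change.
    Effects that hit both lines alike (season, order mix) cancel out."""
    return (pilot_after - pilot_before) - (control_after - control_before)

# Hypothetical unplanned stops per week, averaged over each 60-90 day window
print(attributable_change(pilot_before=20, pilot_after=14, control_before=18, control_after=17))  # -5
```

In this example the pilot line dropped by six stops per week. The control line also improved by one, so only five are credited to the retrofit.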

During the pilot, ensure edge preprocessing and local dashboards are in place so technicians can act on insights immediately. A pilot that delivers a clear reduction in stops or energy use within the trial period builds the case for scaling across the plant and secures budget for additional nodes.

Machine Data Quality

More data does not automatically equal better insights. Curated, synchronized, and preprocessed machine data improves model accuracy and reduces false alerts. McKinsey and other analysts note that digital performance management initiatives often deliver double-digit improvements in throughput while reducing downtime; the key is feeding algorithms reliable inputs.

Edge preprocessing solves many quality issues: it normalizes sampling rates, labels events, filters noise and encrypts sensitive streams locally. That local processing is especially important for European manufacturers concerned about data sovereignty and latency, because it avoids sending raw streams to third-party clouds where compliance and delay can be problematic. By pre-cleaning data at the edge, models receive standardized inputs that require less retraining and produce more actionable predictions.
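Two of these steps, normalizing sampling rates and filtering noise, can be sketched with the standard library alone. The functions below are illustrative assumptions, not a specific product's pipeline. Irregular samples are placed on a fixed time grid by holding the last known value, and a short median filter suppresses isolated spikes. Event labeling and encryption are not covered here:

```python
from bisect import bisect_right
from statistics import median

def resample_hold(timestamps, values, period_s, start_s, end_s):
    """Place irregular samples on a fixed grid, holding the last known value
    (None before the first sample). `timestamps` must be sorted, in seconds."""
    steps = int((end_s - start_s) // period_s) + 1
    grid = []
    for k in range(steps):
        t = start_s + k * period_s
        i = bisect_right(timestamps, t)  # number of samples taken at or before t
        grid.append(values[i - 1] if i > 0 else None)
    return grid

def median_filter(values, width=3):
    """Replace each interior point with the median of `width` neighbours; edge points are kept.
    Apply only to a fully populated grid (no None values)."""
    half = width // 2
    out = list(values)
    for i in range(half, len(values) - half):
        out[i] = median(values[i - half:i + half + 1])
    return out

ts = [0.0, 0.7, 2.1, 2.9]   # irregular sample times in seconds (hypothetical)
temps = [10, 11, 50, 12]    # the 50 is a one-off spike
grid = resample_hold(ts, temps, period_s=0.5, start_s=0.0, end_s=3.0)
print(grid)                 # [10, 10, 11, 11, 11, 50, 12]
print(median_filter(grid))  # [10, 10, 11, 11, 11, 12, 12]
```

Holding the last value suits state-like signals that keep their value until the next update. Linear interpolation would be the better choice for smoothly varying analog signals.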

High-quality feeds also reduce analyst time. Rather than spending days cleaning logs from disparate machines, engineers can focus on root-cause analysis and process changes. The result is faster cycle-time optimization and more effective continuous improvement loops, where shopfloor changes are validated by data within days rather than weeks.

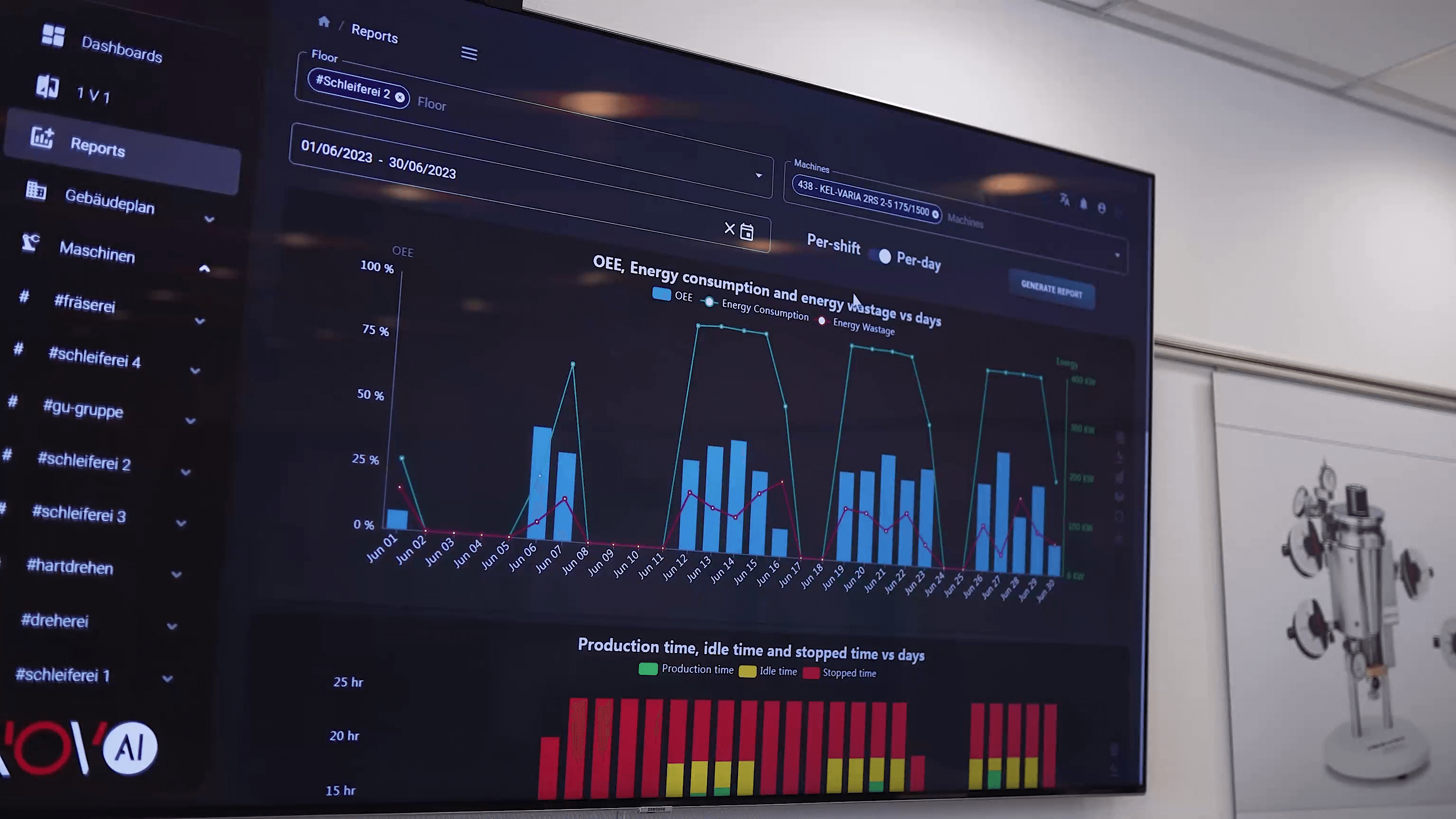

Energy and Bottlenecks

Energy consumption tracking tied to machine data delivers fast savings. When energy spikes are correlated with production states, teams can shift heating cycles or reduce idle energy. Industry summaries show digital strategies can reduce machine downtime by 30–50% and improve throughput by 10–30% when paired with effective analytics (StartUs Insights; McKinsey summaries).
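Correlating energy with production states starts with attributing each meter reading to the state the machine was in at that moment. This minimal sketch assumes evenly spaced readings, each giving average power over its interval and already tagged with a machine state. The state names and readings are hypothetical:

```python
from collections import defaultdict

def energy_by_state(readings, sample_period_s):
    """Sum energy in kWh per machine state from evenly spaced (state, average_power_kw) readings."""
    hours_per_reading = sample_period_s / 3600.0
    totals = defaultdict(float)
    for state, power_kw in readings:
        totals[state] += power_kw * hours_per_reading
    return dict(totals)

readings = [("running", 40.0), ("running", 40.0), ("idle", 12.0),
            ("idle", 12.0), ("idle", 12.0), ("running", 44.0)]
totals = energy_by_state(readings, sample_period_s=900)  # one reading per 15 minutes
idle_share = totals["idle"] / sum(totals.values())
print(totals, f"idle share: {idle_share:.1%}")
```

A high idle share is the signal that shifting heating cycles or switching off auxiliaries between jobs is worth investigating.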

Identifying bottlenecks requires synchronized machine data across lines. When a packaging station underperforms by two cycles per minute, upstream lines must stall or buffer. High-fidelity machine data makes those choke points visible and measurable, so targeted actions (additional shift staffing, small mechanical changes or sequence changes) produce measurable gains. Fixing a single bottleneck can raise line throughput by 5–10% without hiring additional staff.
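The effect of a bottleneck can be shown with a deliberately simple model. In a serial line without buffers or variability, steady-state output is set by the station with the longest cycle time. The sketch below uses hypothetical cycle times. It also shows that speeding up the bottleneck helps only until the next-slowest station takes over:

```python
def line_rate_per_min(cycle_times_s):
    """Steady-state output of a serial line in parts per minute, set by the slowest station."""
    return min(60.0 / c for c in cycle_times_s.values())

def bottleneck(cycle_times_s):
    """Name of the station with the longest cycle time."""
    return max(cycle_times_s, key=cycle_times_s.get)

stations = {"press": 20.0, "weld": 24.0, "packaging": 30.0}  # hypothetical cycle times in seconds
print(bottleneck(stations), line_rate_per_min(stations))     # packaging 2.0

stations["packaging"] = 22.0  # improvement on the bottleneck
print(bottleneck(stations), line_rate_per_min(stations))     # weld 2.5
```

Cutting the packaging cycle by about 27% raises output by 25%, not 36%, because the weld station becomes the new constraint. Real lines with buffers and variable cycle times need simulation or measured data, but the principle holds.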

Bottleneck management is often the quickest route to ROI. The Pareto principle frequently applies: a few machines cause most of the line losses. Target those machines first and use data to validate fixes before wider rollout.
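Finding the few machines that cause most losses is a ranking exercise. The function below uses hypothetical downtime totals. It returns the smallest set of machines that together account for at least 80% of recorded downtime. The 80% cut-off is a convention, not a rule:

```python
def pareto_top(downtime_min, share=0.8):
    """Smallest set of machines whose combined downtime reaches `share` of the total."""
    total = sum(downtime_min.values())
    ranked = sorted(downtime_min.items(), key=lambda item: item[1], reverse=True)
    selected, cumulative = [], 0.0
    for machine, minutes in ranked:
        selected.append(machine)
        cumulative += minutes
        if cumulative >= share * total:
            break
    return selected

downtime = {"press-1": 420, "press-2": 310, "lathe-3": 60,
            "lathe-4": 50, "welder-5": 40, "welder-6": 30}  # minutes per month (hypothetical)
print(pareto_top(downtime))  # ['press-1', 'press-2']
```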

Security and ROI

Data security and compliance are as important as signal quality. For many European manufacturers, local processing and encryption reduce legal risk and maintain operational control. Deloitte's 2025 survey reports that 78% of leaders are allocating more than 20% of their improvement budgets toward smart manufacturing (Deloitte, 2025). With investment at that scale, security and compliance need to be budgeted alongside new capability.

Beyond legal considerations, security impacts cost. A secure, standardized retrofit lowers the cost of ownership because it reduces time spent on debugging custom integrations and ad-hoc scripts. Rather than each machine having bespoke connectors and Excel macros, a standardized sensor and edge stack creates repeatable deployments that are cheaper to maintain over time. That repeatability also shortens training cycles for new technicians and improves uptime consistency plant-wide.

3 Steps to Scale

- Standardize the sensor and edge stack across a cell to simplify maintenance.

- Measure baseline KPIs and agree on short-term goals (30–90 days) to show quick wins.

- Formalize handover procedures so insights lead to action—log repairs, update SOPs, and assign ownership.

Scaling also requires change management. Train technicians to trust alerts, include operations in model tuning, and document decisions. When the maintenance team understands why a predictive alert matters and how to act, the value compounds: fewer false alarms, faster repairs and improved morale.

Why AI Beats DIY

AI applied to machine data extracts patterns humans miss: subtle drift, correlated events and early-stage wear signatures. Off-the-shelf ML models can generate many false leads when fed raw, noisy data. By contrast, AI models trained on curated feeds from retrofitted sensors detect repeatable anomalies and prioritize interventions by predicted downtime impact.
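Prioritizing by predicted downtime impact can be as simple as ranking alerts by expected downtime: the model's failure probability multiplied by the downtime a failure would cause. The `Alert` fields below are hypothetical placeholders for whatever a given model outputs:

```python
from dataclasses import dataclass

@dataclass
class Alert:
    machine: str
    failure_prob: float  # model's probability of failure within the planning horizon (hypothetical field)
    downtime_h: float    # estimated downtime in hours if the failure occurs

    @property
    def expected_downtime_h(self) -> float:
        return self.failure_prob * self.downtime_h

def prioritize(alerts):
    """Order alerts so the largest expected downtime is handled first."""
    return sorted(alerts, key=lambda a: a.expected_downtime_h, reverse=True)

alerts = [Alert("press-3", 0.6, 2.0), Alert("lathe-1", 0.2, 8.0), Alert("pump-2", 0.9, 0.5)]
print([a.machine for a in prioritize(alerts)])  # ['lathe-1', 'press-3', 'pump-2']
```

The lathe alert ranks first despite its low probability, because a failure there would stop production for a full shift. Multiplying by an hourly downtime cost per machine would turn the same ranking into a euro figure when machines differ in criticality.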

The practical benefits include predictive alerts that reduce mean time to repair, automated anomaly triage, and contextualized recommendations that guide technicians. Deloitte’s 2025 survey found many manufacturers reported improved output and reduced defects after adding smart capabilities, supporting the case for AI-driven approaches. These productivity gains translate to measurable business outcomes: fewer expedited shipments, lower scrap, and better on-time delivery rates.

There is a contrarian view: DIY can be cheaper upfront. But the hidden costs are often operational: maintenance of custom scripts, fragmented schemas, security gaps and missed events that lead to costly failures. Modern solutions such as Novo AI's WatchMen platform streamline capture and analysis, so teams can focus on decisions rather than debugging.

Implementation Checklist

- Start with a pilot on a known problem line and define baseline KPIs.

- Use machine-agnostic retrofits to standardize capture quickly.

- Ensure edge preprocessing for data quality and sovereignty.

- Apply AI models to curated feeds and measure OEE before/after.

- Scale with focused objectives: energy, uptime, or throughput.

- Document operating procedures so insights turn into repeatable practices.

The Path Forward

Modern solutions like Novo AI's WatchMen platform simplify machine data capture and analysis, letting manufacturers focus on decisions rather than wiring and cleanup. The evidence is clear: structured, AI-ready machine data delivers faster, more reliable gains than piecemeal DIY systems and spreadsheets.

If your plant is still relying on manual logs and ad-hoc sensors, consider a targeted retrofit pilot that proves value inside 90 days. The right machine data strategy is not about collecting everything; it is about collecting the right, high-quality signals to make decisions that reduce downtime, save energy and increase OEE. Start small, scale fast.

References

- Deloitte: 2025 Smart Manufacturing and Operations Survey - Findings on output improvements and budgets (accessed: 2025-10-09)

- McKinsey: Transforming advanced manufacturing through Industry 4.0 - Examples of OEE improvements (accessed: 2025-10-09)

- IoT Analytics: Smart manufacturing learnings - Industry case studies and trends (accessed: 2025-10-09)

- Manufacturing Leadership Council: Digital transformation growth - Maturity and adoption stats (accessed: 2025-10-09)

- StartUs Insights: Digital transformation technologies - Data on downtime and throughput (accessed: 2025-10-09)